4.5.2. Individual Evaluation

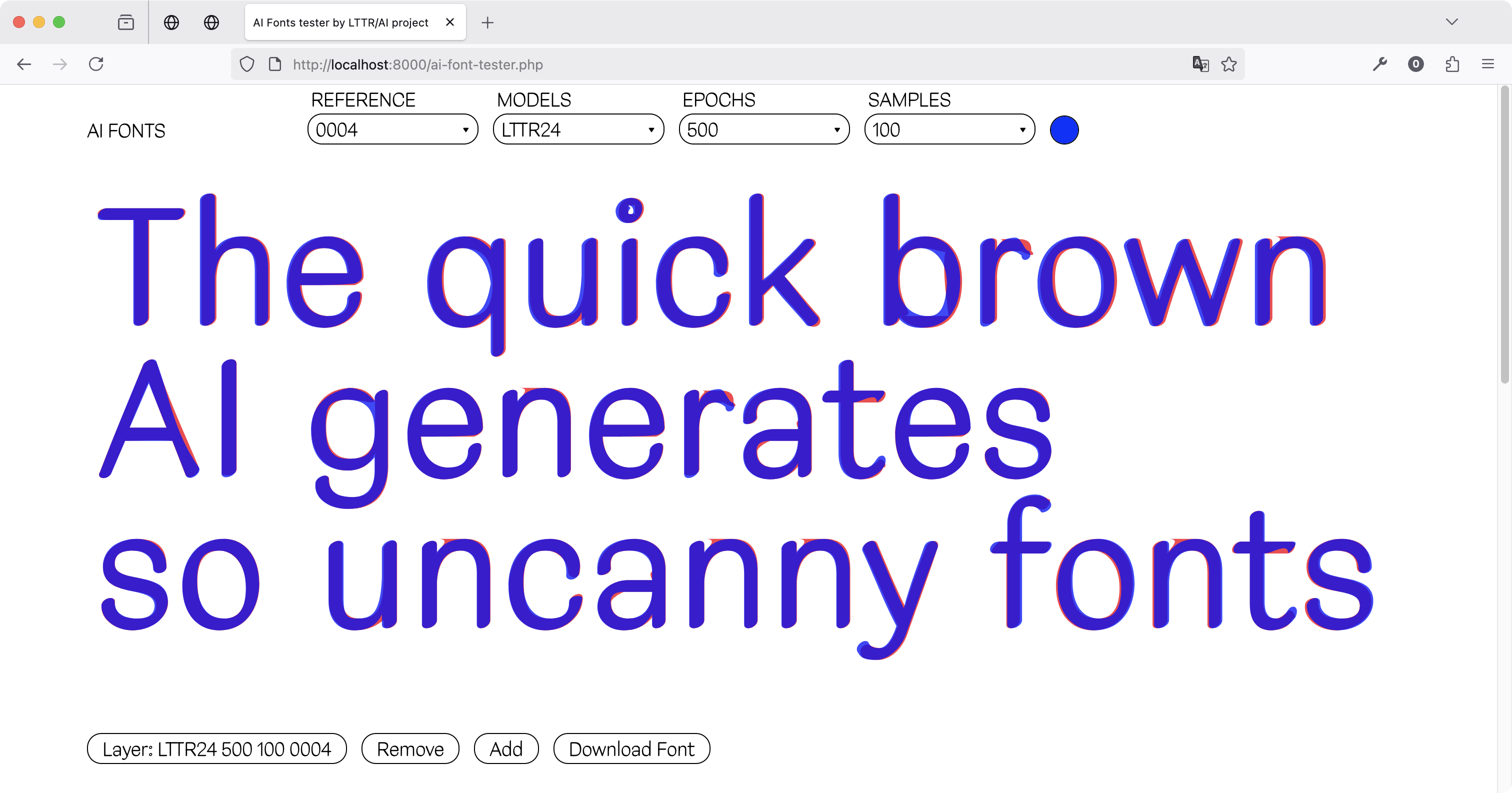

LTTR24 Base Model

The variant trained for 500 epochs provided the best results on all three aesthetic heuristics. The 300-epoch variant performed slightly better than the 600-epoch variant in detail precision and alphabet consistency.

The number of generated samples did not produce significantly different results for any of the epoch variants.

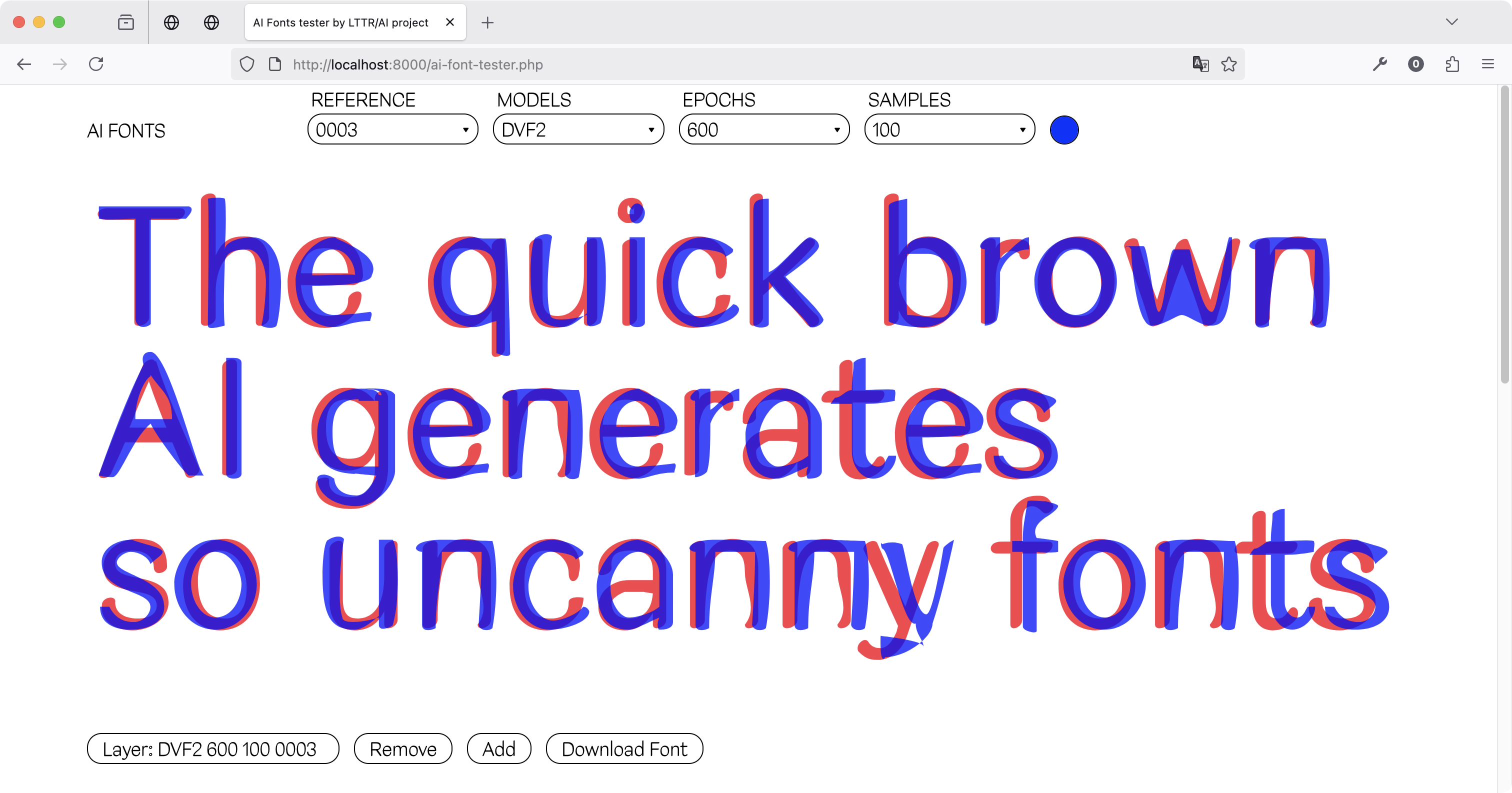

DeepVecFont-2 Original Model

Only one version of this model was tested in the experiment; therefore, the effect of training effort was not evaluated.

From the inference effort perspective, the reference matching heuristic does appear to show some difference: 50 and 100 generated samples provided better results than 20 and 30 generated samples. However, the significance of this difference is questionable.

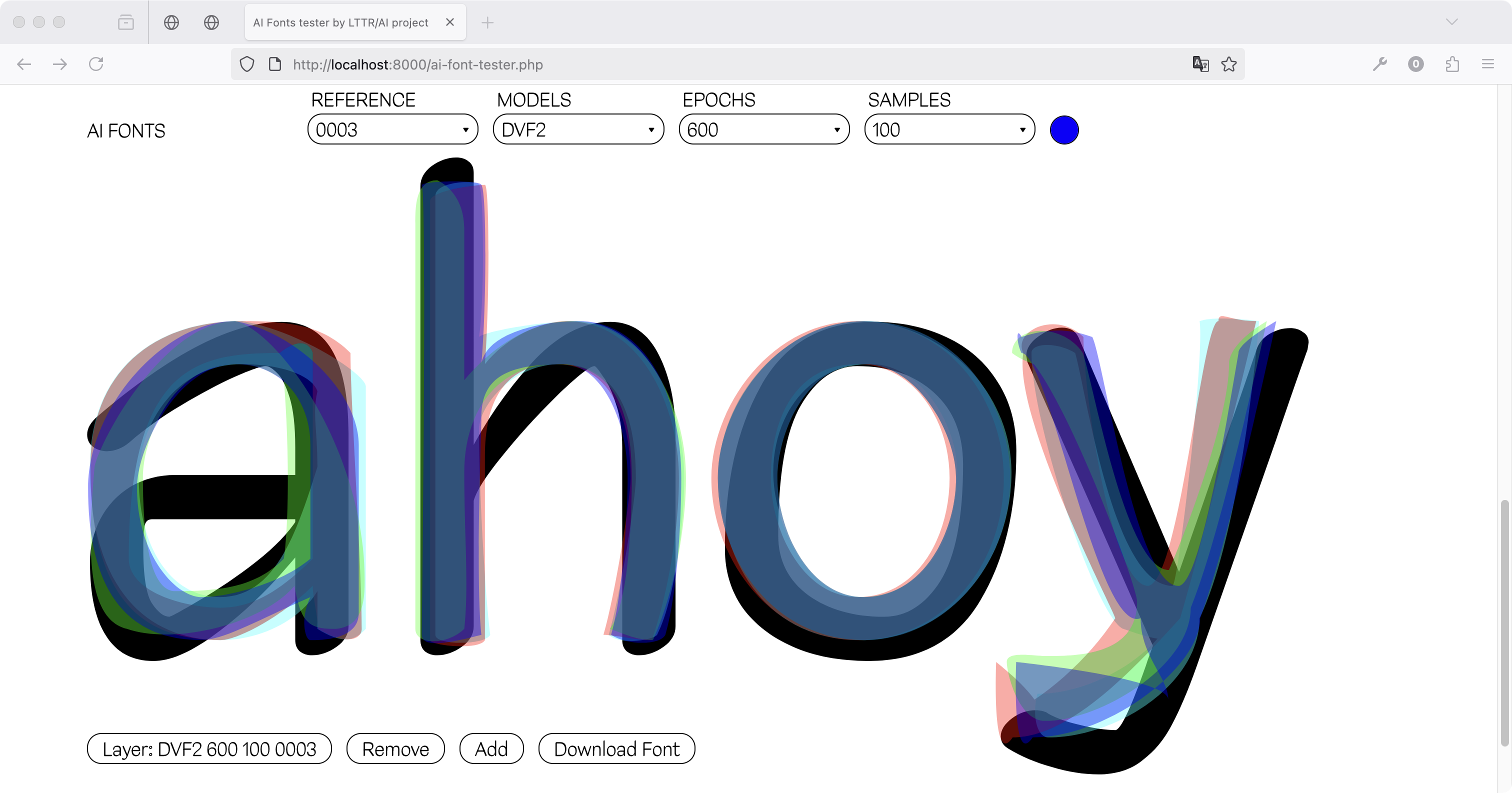

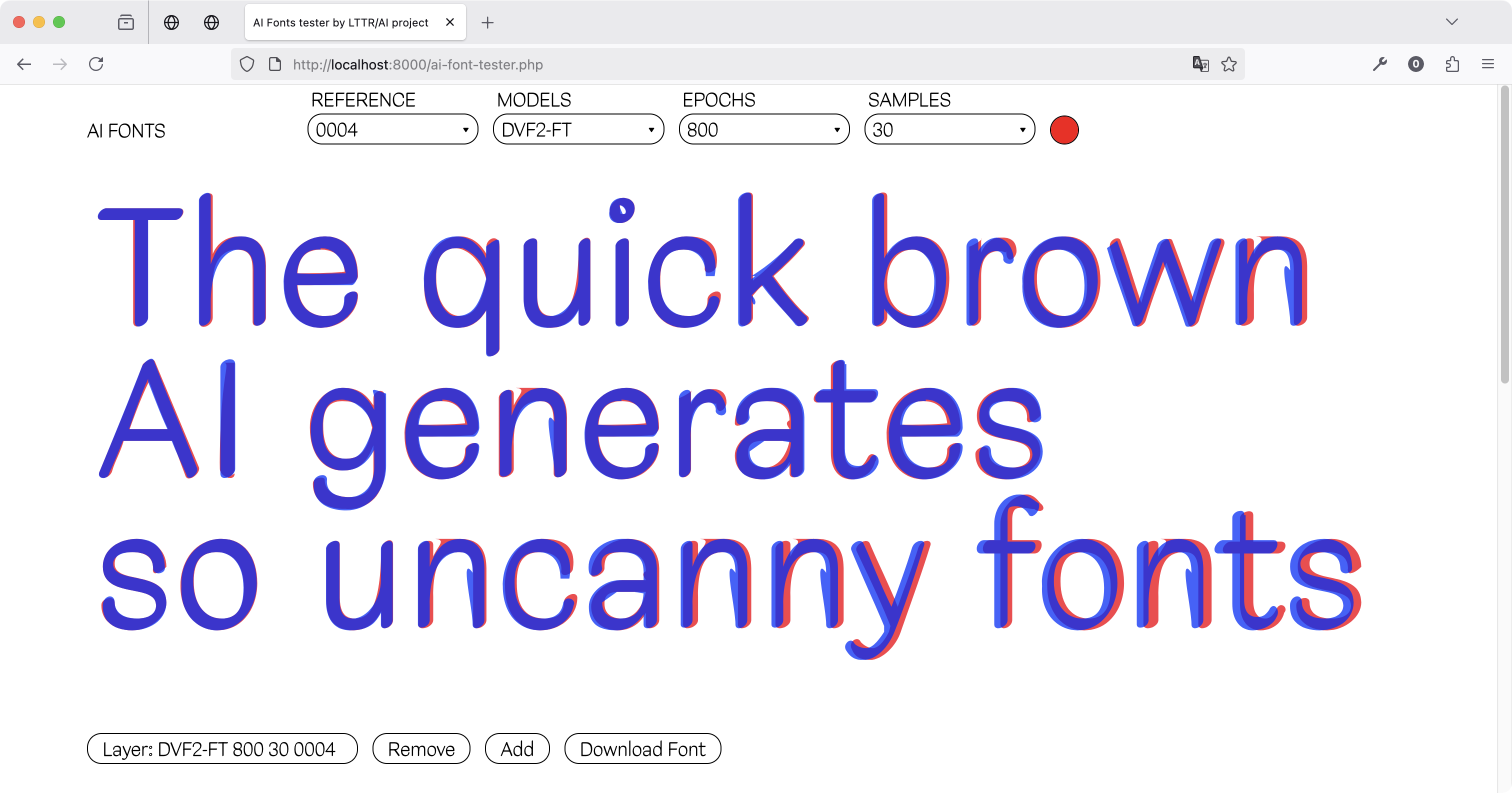

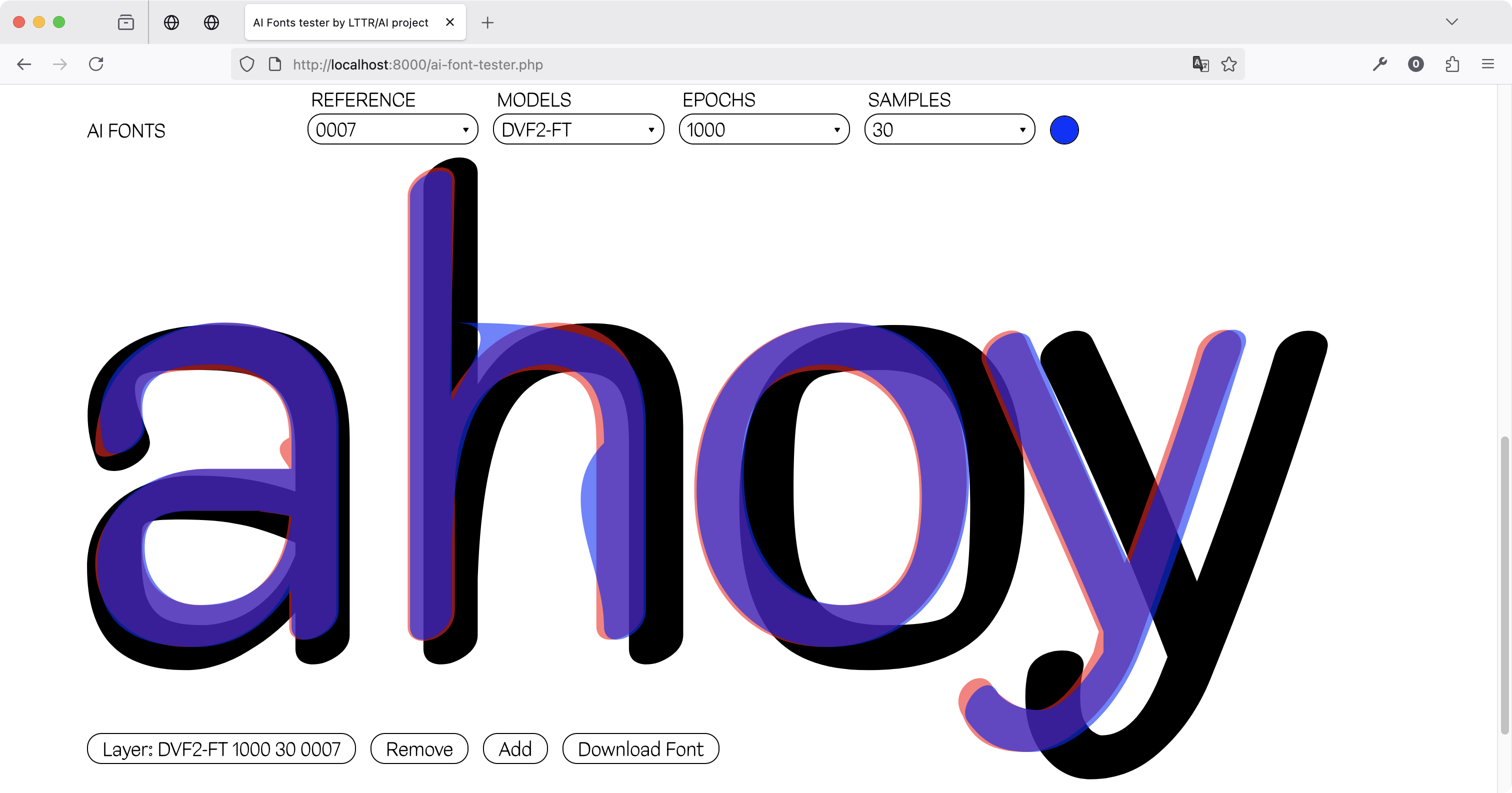

DeepVecFont-2 Fine-tuned on the LTTRSET Dataset

In the detail precision criterion, the variant trained for 800 epochs performed slightly better than the 1000-epoch variant. However, the difference is not significant and may be affected by subjective judgement bias.

Reference matching performance appears to be similar for both variants. In the alphabet consistency criterion, the 1000-epoch variant achieved slightly better results.

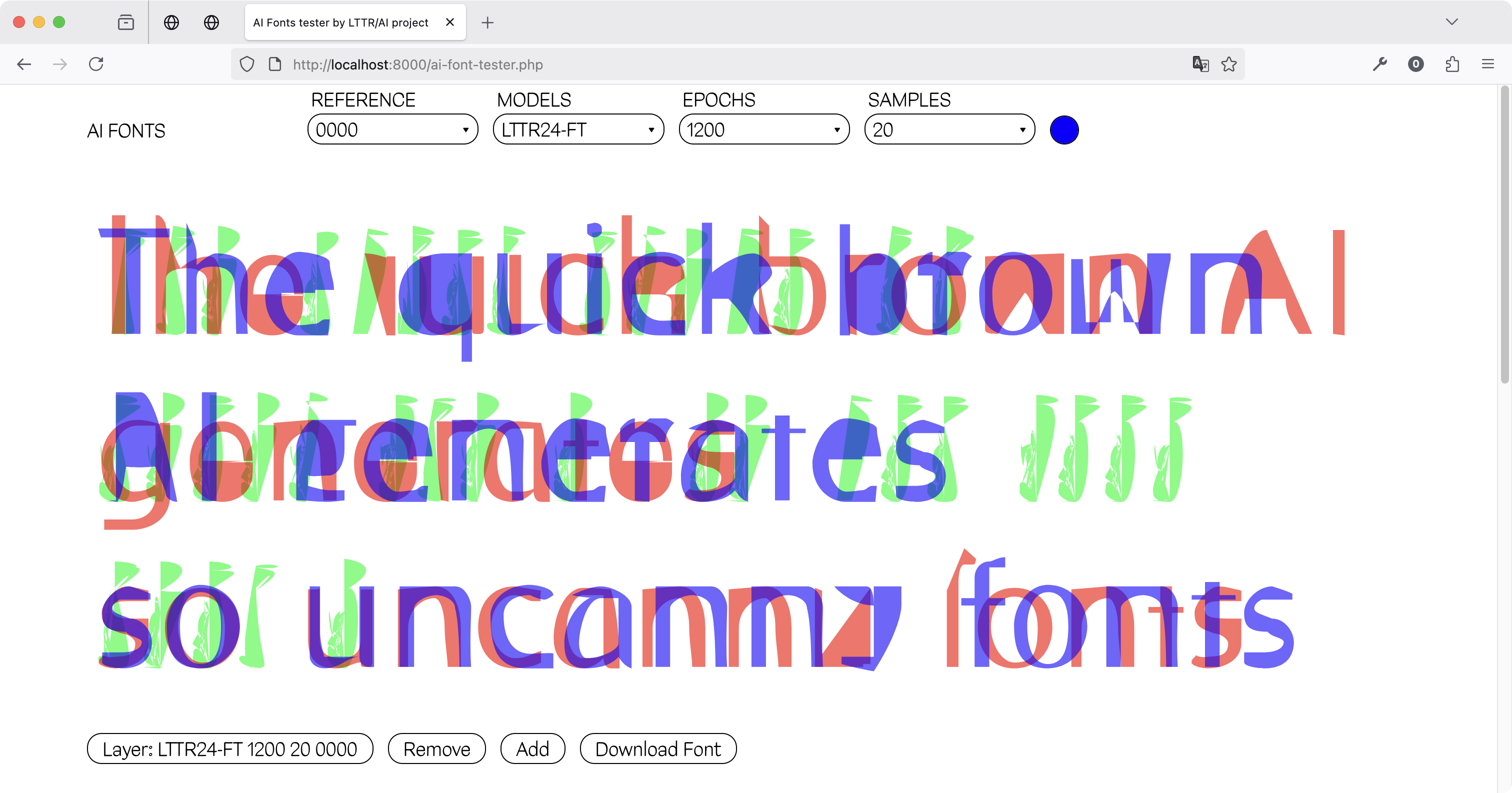

LTTR24 Fine-tuned on the SVG-Font Dataset

The variant trained for 1100 epochs provided the best results on all three aesthetic heuristics. The 1200-epoch variant performed slightly worse. The 800- and 1000-epoch variants generated strange images that do not even resemble letters.

The number of generated samples did not produce significantly different results for any of the epoch variants.