3.1.2. Font Completion

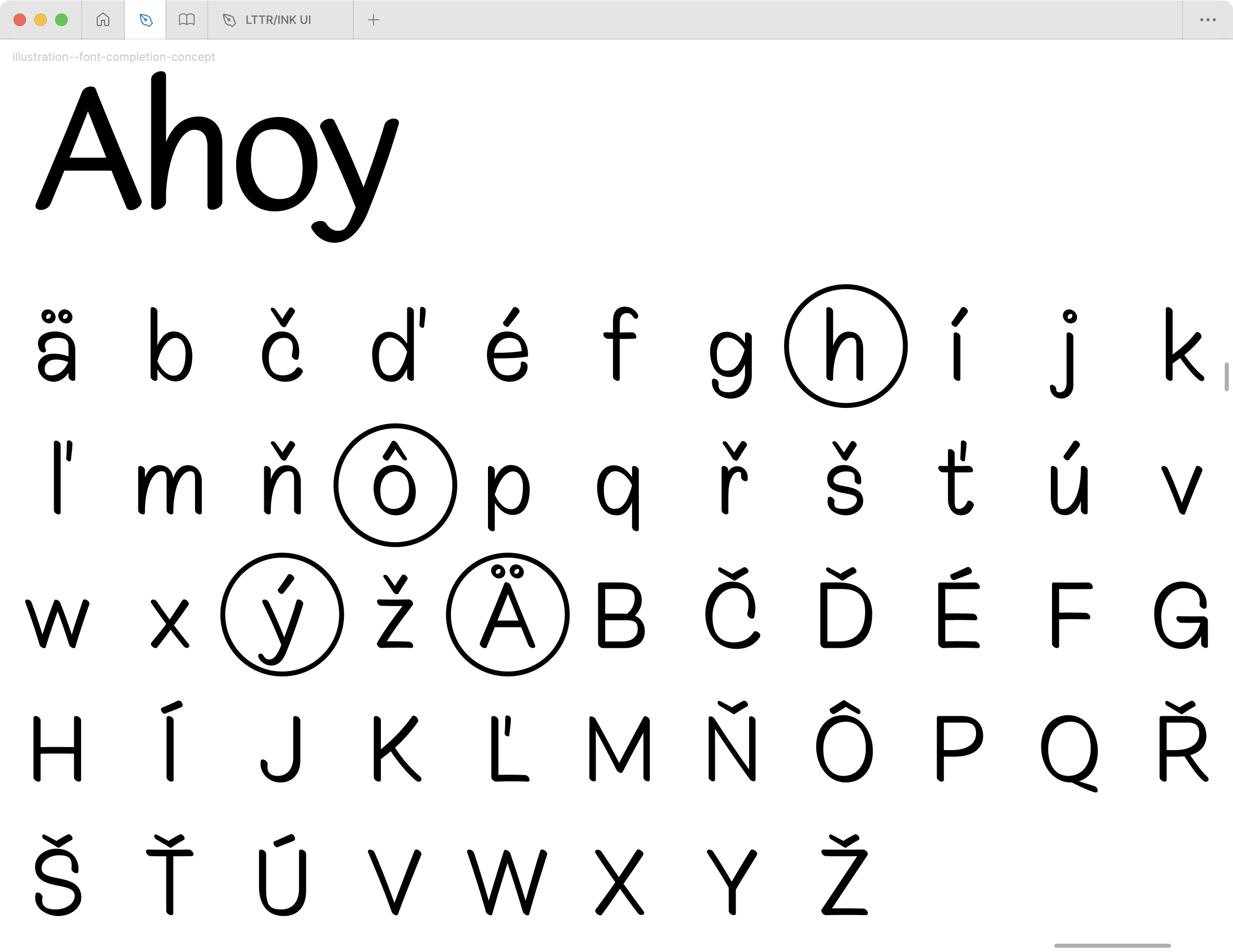

Extending a font family to other languages is a common task for large brands that localise their visual communication, and machine learning offers one way to assist with it. Font completion, also known as few-shot font generation or font style transfer, aims to complete an entire font alphabet from only a few reference glyphs.

The literature database reveals an early attempt from 2010 that presents a morphable template model (Suveeranont and Igarashi 2010). This model completes fonts from user sketches through an intriguing process: it analyses a single letter sketch – though the system could potentially accommodate more – to determine its relative position between font masters. This positioning then serves as the foundation for generating the complete character set.
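The text does not give the model's equations, so the following is only a minimal sketch under stated assumptions: each master's version of a letter is an equal-length array of outline points, and the sketch's "relative position between font masters" is represented as blending weights found by ordinary least squares. The same weights are then applied to every other letter drawn in those masters. The function names `fit_master_weights` and `blend` and the random master data are hypothetical, not taken from Suveeranont and Igarashi (2010).

```python
import numpy as np

def fit_master_weights(sketch, masters):
    """Least-squares weights w such that sum_k w[k] * masters[k] approximates sketch.

    sketch:  (n_points, 2) outline of the sketched letter (assumed resampled)
    masters: (n_masters, n_points, 2) the same letter drawn in each master font
    """
    A = masters.reshape(len(masters), -1).T   # (2 * n_points, n_masters)
    b = sketch.reshape(-1)                    # (2 * n_points,)
    w, *_ = np.linalg.lstsq(A, b, rcond=None)
    return w                                  # unconstrained, so extrapolation is possible

def blend(masters, w):
    """Apply the fitted weights to another letter drawn in the same masters."""
    return np.tensordot(w, masters, axes=1)   # (n_points, 2)

# Hypothetical data: two masters (e.g. light and bold) for the letters "a" and "b".
rng = np.random.default_rng(0)
masters_a = rng.normal(size=(2, 32, 2))
masters_b = rng.normal(size=(2, 32, 2))
sketch_a = 0.3 * masters_a[0] + 0.7 * masters_a[1]   # a sketch lying between the masters

w = fit_master_weights(sketch_a, masters_a)
new_b = blend(masters_b, w)                          # "b" in the sketched style
```

Because the weights are unconstrained, the sketch can land outside the masters, which corresponds to extrapolation; adding a sum-to-one constraint would restrict the model to interpolation.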

The approach, while clever in its simplicity, operates purely within the space spanned by its font masters. Like Alice’s looking glass, it can only reflect what exists within its predetermined boundaries, interpolating or extrapolating from the masters it is given. However, for typographic workhorse fonts that prioritise clarity over creative flourishes, this constraint may prove surprisingly sufficient.

The model employs a weighting system that requires the glyph data to be resampled so that the masters are computationally compatible. Its methodology shares theoretical underpinnings with both manifold learning (Campbell and Kautz 2014) and variable fonts technology (Thottingal, n.d.). Predating the deep learning paradigms that now dominate the field, it takes a parametric approach, applying direct algorithmic computations to the data: an approach that, while perhaps unfashionable in today’s neural network-obsessed world, retains its own elegant efficiency.
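The source does not specify the resampling scheme. One common way to make outlines compatible for weighting is to resample each closed contour to the same number of points, evenly spaced by arc length; the sketch below assumes this kind of resampling and uses a hypothetical helper name, `resample_closed`.

```python
import numpy as np

def resample_closed(points, n):
    """Resample a closed polyline outline to n points evenly spaced by arc length."""
    pts = np.asarray(points, dtype=float)
    closed = np.vstack([pts, pts[:1]])                     # repeat the first point to close the loop
    seg = np.linalg.norm(np.diff(closed, axis=0), axis=1)  # length of each segment
    cum = np.concatenate([[0.0], np.cumsum(seg)])          # arc length at each vertex
    targets = np.linspace(0.0, cum[-1], n, endpoint=False)
    x = np.interp(targets, cum, closed[:, 0])
    y = np.interp(targets, cum, closed[:, 1])
    return np.stack([x, y], axis=1)

# A unit square with 4 vertices becomes an 8-point outline with one point per half side.
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
resampled = resample_closed(square, 8)
```

Once every master's outline has the same point count and a consistent starting point, corresponding points can be combined with a weighted sum.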

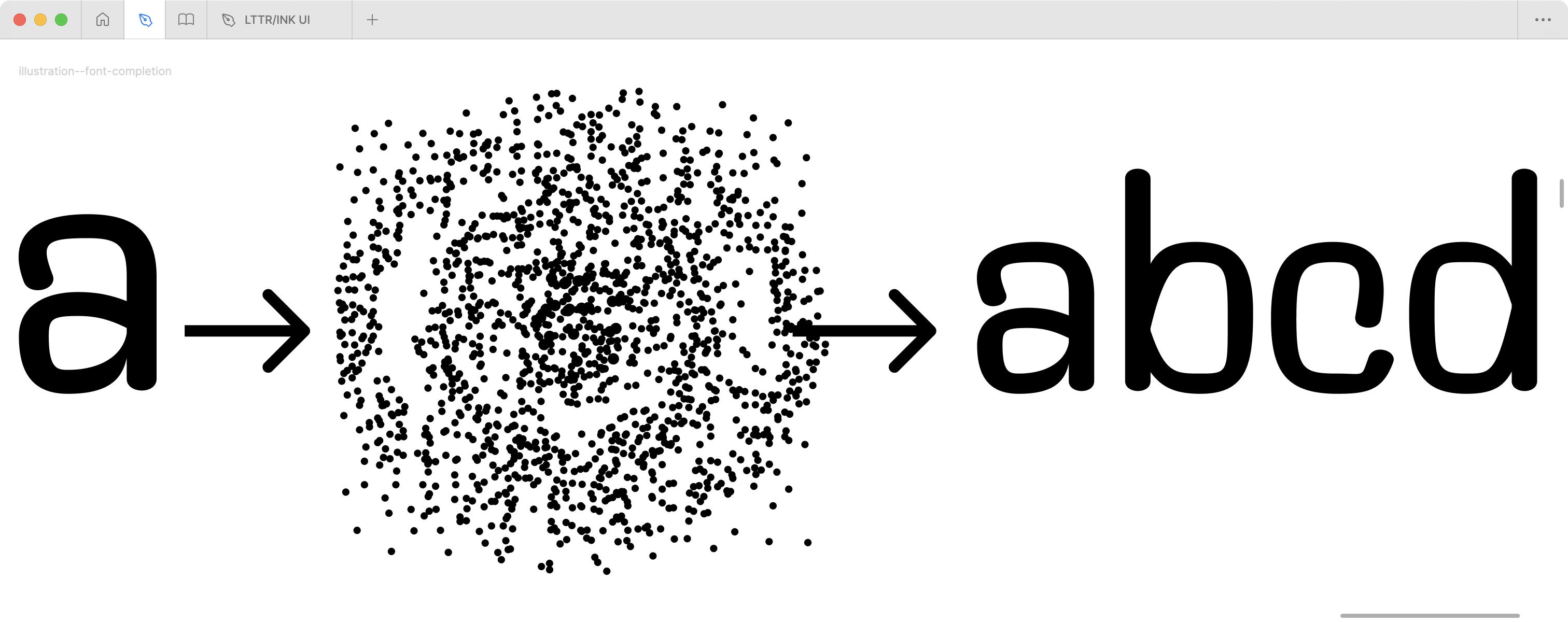

In contrast to the parametric approach, deep learning methods function as sophisticated interpreters of typographic style. These systems are trained through observation and analysis of exemplar fonts, developing an understanding of stylistic features that extend beyond mere calculation. When presented with user-provided reference glyphs, the system draws upon its learned repertoire of styles – and, rather like the Cheshire Cat’s enigmatic appearances, may introduce elements of controlled randomness that spark creative possibilities.

The technical architecture underlying these font completion methods typically employs an encoder-decoder framework augmented with refinement stages and auxiliary modules. This arrangement allows the system to both capture and recreate the essence of letterforms while maintaining stylistic coherence across the generated character set.

During the training phase:

- Encoders are trained to extract glyph shapes and styles from data and encode them into latent representations. These representations capture the essential style and structural information of the glyphs.

- Decoders are trained to generate the whole font by identifying the style from the latent representations derived from reference glyphs. They use these representations to produce glyphs that maintain the style and structure of the reference set.

During the inference phase:

- Encoders extract style features from reference glyphs and encode them into latent representations. These latent representations encapsulate the style information needed for generating new glyphs.

- Decoders aggregate the latent representations from the encoders and use them to generate the entire font set in the style of the reference glyphs.
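The training and inference roles above can be illustrated with a deliberately small forward pass. This is a minimal sketch, not any published architecture: the bitmap size, latent size, mean aggregation of reference codes, per-character content embeddings and randomly initialised weights are all assumptions, and training (fitting the weights with, for example, a reconstruction loss) is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
SIZE = 32                  # glyph bitmap side length (assumed)
IMG = SIZE * SIZE          # flattened bitmap size
STYLE_DIM = 16             # size of the style latent (assumed)
N_CHARS = 26               # size of the target character set (assumed)

# Random weights stand in for parameters that training would learn.
W_enc = rng.normal(scale=0.01, size=(IMG, STYLE_DIM))
char_emb = rng.normal(size=(N_CHARS, STYLE_DIM))            # content code per character
W_dec = rng.normal(scale=0.1, size=(2 * STYLE_DIM, IMG))

def encode_style(refs):
    """Encoder: map each reference glyph bitmap to a latent vector."""
    return np.tanh(refs.reshape(len(refs), -1) @ W_enc)     # (n_refs, STYLE_DIM)

def decode_font(style_codes):
    """Decoder: aggregate the reference latents, then generate every character."""
    style = style_codes.mean(axis=0)                         # aggregate over references
    z = np.concatenate([char_emb, np.tile(style, (N_CHARS, 1))], axis=1)
    logits = z @ W_dec                                       # (N_CHARS, IMG)
    pixels = 1.0 / (1.0 + np.exp(-logits))                   # intensities in (0, 1)
    return pixels.reshape(N_CHARS, SIZE, SIZE)

refs = rng.random((3, SIZE, SIZE))     # three hypothetical user-provided reference glyphs
font = decode_font(encode_style(refs))
```

The split mirrors the description: the encoder only sees reference glyphs, while the decoder combines a shared style code with a per-character content code to produce the whole set.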

In terms of spatial representation, most methods in the literature database work with rasterised images, and such papers are numerous. The thesis presents a few notable examples here, although the selection was not made by a meticulous method. (Jiang et al. 2017) proposed an end-to-end font style transfer system that generates a large-scale Chinese font from only a small number of training samples, using a few-shot stacked conditional GAN to produce a set of multi-content glyph images that follow a consistent style. (Cha et al. 2020) focused on compositional scripts and proposed a font generation framework that employs memory components and global-context awareness in the generator to take advantage of compositionality. (Aoki et al. 2021) proposed a framework that introduced deep metric learning to style encoders. (Li et al. 2021) proposed a translator-based GAN that can generate fonts by observing only a few samples from other languages. (J. Park, Muhammad, and Choi 2021) proposed a model built on the initial, medial, and final components of Hangul. (S. Park et al. 2021) employed an attention-based system of so-called local experts to extract multiple style features that are not explicitly conditioned on component labels. (Reddy et al. 2021) introduced a deep implicit representation of fonts, which, unlike the other projects, does not keep the data representation in raster form; however, the generation still ends with raster images. (Tang et al. 2022) proposed an approach that learns fine-grained local styles from references, together with the spatial correspondence between content and reference glyphs. (Muhammad and Choi 2022) proposed a model that simultaneously learns to separate font styles in an embedding space, where distances directly correspond to a measure of font similarity, and translates input images into a given observed or unobserved font style. (W. Liu et al. 2022) proposed a self-supervised cross-modality pre-training strategy and a cross-modality transformer-based encoder conditioned jointly on the glyph image and the corresponding stroke labels. (He, Jin, and Chen 2022) proposed a cross-lingual font generator based on AGIS-Net (Gao et al. 2019).
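Several of these works rely on an embedding space in which distance reflects font similarity. A standard way to shape such a space is a triplet margin loss; the sketch below shows that generic loss with squared Euclidean distances and is not claimed to be the exact objective used by Aoki et al. (2021) or Muhammad and Choi (2022). The margin value is an assumption.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge loss that pulls same-font embeddings together and pushes other fonts apart.

    anchor, positive: embeddings of two glyphs from the same font, shape (batch, dim)
    negative:         embeddings of glyphs from a different font, shape (batch, dim)
    """
    d_pos = np.sum((anchor - positive) ** 2, axis=1)   # squared distance, same font
    d_neg = np.sum((anchor - negative) ** 2, axis=1)   # squared distance, different font
    return np.maximum(0.0, d_pos - d_neg + margin).mean()

a = np.array([[0.0, 0.0]])
near = np.array([[0.0, 1.0]])
far = np.array([[3.0, 0.0]])
well_separated = triplet_loss(a, near, far)    # already separated by more than the margin
violating = triplet_loss(a, far, near)         # the "same font" glyph is the farther one
```

The loss is zero once every different-font glyph sits at least `margin` farther away than the same-font glyph, so training only pushes on triplets that violate that ordering.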

The prevalence of raster-based approaches in the literature presents a curious paradox. While these methods demonstrate technical sophistication, they fundamentally misalign with the vector-based foundations of professional type design. Like rendering a font as a pointillist painting instead of drawing it with geometric tools, these raster-domain solutions remain unsuitable for practical font production. The abundance of image-based representations in academic literature suggests a concerning disconnect between research directions and industry requirements.

Amidst the raster-dominated landscape, a few notable heroes emerge. These projects distinguish themselves through their capacity to generate authentic vector fonts – or at minimum, vector-based drawings – marking them as particularly relevant to practical type design applications.

FontCycle (Yuan et al. 2021) leverages a graph-based representation to extract style features from raster images and graph transformer networks to generate complete fonts from a few image samples. Although the graph-based representation seems a promising way to encode the spatial information of glyph shapes, the absence of the original Bézier curve drawings as input is a limitation that leaves the system behind the other approaches.
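The text does not describe how FontCycle builds its graph, so the following only illustrates the general idea of turning a raster glyph into graph data. In this hypothetical construction, every foreground pixel becomes a node carrying its coordinates, and 4-connected neighbouring pixels are joined by edges; the helper name `raster_to_graph` is an assumption.

```python
import numpy as np

def raster_to_graph(bitmap):
    """Nodes are foreground pixels; edges join 4-connected foreground neighbours."""
    ys, xs = np.nonzero(bitmap)
    index = {(y, x): i for i, (y, x) in enumerate(zip(ys.tolist(), xs.tolist()))}
    edges = []
    for (y, x), i in index.items():
        for dy, dx in ((0, 1), (1, 0)):       # look right and down so each edge appears once
            j = index.get((y + dy, x + dx))
            if j is not None:
                edges.append((i, j))
    nodes = np.stack([xs, ys], axis=1)        # node features: (x, y) pixel coordinates
    return nodes, edges

# A tiny L-shaped "glyph": three foreground pixels in a 2 x 2 bitmap.
bitmap = np.array([[1, 0],
                   [1, 1]])
nodes, edges = raster_to_graph(bitmap)
```

A graph network can then pass messages along these edges; practical systems would typically use a sparser structure, such as skeleton or contour points, rather than every pixel.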

DeepVecFont (Yizhi Wang and Lian 2021) works with a dual-modality representation that exploits both advances in the raster image domain and vector outlines suitable for type design. These features make DeepVecFont a strong candidate for type designers, helping them complete their fonts from a few initially provided glyphs.
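On the vector side, a glyph outline can be fed to a sequence model as a series of drawing commands. The encoding below is a hypothetical, simplified format and not DeepVecFont's exact one: each command becomes a row holding a one-hot command type followed by its coordinates, zero-padded to a fixed width. The command set (move, line, quadratic curve, close) is an assumption modelled on SVG-style path commands.

```python
import numpy as np

COMMANDS = ["M", "L", "Q", "Z"]   # move, line, quadratic curve, close path (assumed set)
N_ARGS = 4                         # room for up to two (x, y) pairs per command

def encode_commands(path):
    """Encode [(command, args), ...] as rows of [one-hot command | zero-padded args]."""
    rows = []
    for cmd, args in path:
        one_hot = np.zeros(len(COMMANDS))
        one_hot[COMMANDS.index(cmd)] = 1.0
        padded = np.zeros(N_ARGS)
        padded[:len(args)] = args
        rows.append(np.concatenate([one_hot, padded]))
    return np.stack(rows)           # (n_commands, len(COMMANDS) + N_ARGS)

# A hypothetical closed shape with one straight edge and one curved edge.
path = [("M", [0, 0]), ("L", [10, 0]), ("Q", [10, 10, 0, 10]), ("Z", [])]
seq = encode_commands(path)
```

The raster modality would pair each such sequence with a rendered bitmap of the same glyph.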

VecFontSDF (Xia, Xiong, and Lian 2023) calculates quadratic Bézier curves from raster image input by exploiting the signed distance function. Like FontCycle (Yuan et al. 2021), VecFontSDF (Xia, Xiong, and Lian 2023) is held back from industry adoption because it lacks real vector drawing inputs.
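To clarify what a signed distance function means for a glyph, the sketch below evaluates an approximate SDF for an outline built from quadratic Bézier segments: the unsigned distance is the minimum distance to densely sampled curve points, and the sign comes from an even-odd point-in-polygon test. It does not reproduce VecFontSDF's learned representation; the sampling density, the sign convention (negative inside) and the helper names are assumptions.

```python
import numpy as np

def quad_bezier(p0, p1, p2, n=64):
    """Sample a quadratic Bézier curve B(t) = (1-t)^2 p0 + 2(1-t)t p1 + t^2 p2."""
    p0, p1, p2 = (np.asarray(p, dtype=float) for p in (p0, p1, p2))
    t = np.linspace(0.0, 1.0, n)[:, None]
    return (1 - t) ** 2 * p0 + 2 * (1 - t) * t * p1 + t ** 2 * p2

def inside(point, polygon):
    """Even-odd ray casting against the polygon formed by the sampled outline."""
    x, y = point
    result = False
    for (x0, y0), (x1, y1) in zip(polygon, np.roll(polygon, -1, axis=0)):
        if (y0 > y) != (y1 > y):                      # edge straddles the horizontal ray
            x_cross = x0 + (y - y0) * (x1 - x0) / (y1 - y0)
            if x < x_cross:
                result = not result
    return result

def signed_distance(point, outline):
    """Approximate SDF: negative inside the outline, positive outside."""
    d = np.min(np.linalg.norm(outline - np.asarray(point, dtype=float), axis=1))
    return -d if inside(point, outline) else d

# A 2 x 2 square drawn with four quadratic segments (control points on the edges).
outline = np.vstack([
    quad_bezier((0, 0), (1, 0), (2, 0)),
    quad_bezier((2, 0), (2, 1), (2, 2)),
    quad_bezier((2, 2), (1, 2), (0, 2)),
    quad_bezier((0, 2), (0, 1), (0, 0)),
])
sd_centre = signed_distance((1, 1), outline)    # about -1: inside, one unit from each edge
sd_outside = signed_distance((3, 1), outline)   # about +1: outside, one unit from the right edge
```

The zero level set of such a field is the glyph outline itself, which is why an SDF can serve as a bridge between raster-like fields and curve-based outlines.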

DeepVecFont-v2 (Yuqing Wang et al. 2023), a descendant of the capable DeepVecFont (Yizhi Wang and Lian 2021), addresses the limitation of sequentially encoding a variable number of drawing commands by replacing the original LSTM with transformers. The system achieves exceptional results, which makes it a good candidate for completing complex fonts.
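Unlike an LSTM, which consumes commands one step at a time, a transformer processes a whole batch of command sequences in parallel, so glyphs with different numbers of commands are usually padded to a common length and the padding is hidden with an attention mask. The sketch below shows that generic mechanism with plain scaled dot-product attention; it is not DeepVecFont-v2's actual architecture, and the sequence lengths and feature width are assumptions.

```python
import numpy as np

def pad_sequences(seqs, max_len):
    """Pad (n_i, d) command sequences to (batch, max_len, d) and return a validity mask."""
    d = seqs[0].shape[1]
    batch = np.zeros((len(seqs), max_len, d))
    mask = np.zeros((len(seqs), max_len), dtype=bool)
    for i, s in enumerate(seqs):
        n = min(len(s), max_len)
        batch[i, :n] = s[:n]
        mask[i, :n] = True
    return batch, mask

def masked_attention(q, k, v, mask):
    """Scaled dot-product attention that gives zero weight to padded key positions."""
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])   # (batch, len, len)
    scores = np.where(mask[:, None, :], scores, -1e9)          # hide padded keys
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)             # softmax over keys
    return weights @ v, weights

rng = np.random.default_rng(0)
glyphs = [rng.random((3, 8)), rng.random((5, 8))]   # two glyphs with 3 and 5 commands
batch, mask = pad_sequences(glyphs, max_len=6)
out, attn = masked_attention(batch, batch, batch, mask)
```

Because every position attends to every other position directly, long outlines do not have to squeeze their history through a single recurrent state.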

DualVector (Y.-T. Liu et al. 2023), similar to DeepVecFont (Yizhi Wang and Lian 2021) and DeepVecFont-v2 (Yuqing Wang et al. 2023), leverages both raster and sequential encoding. However, its input consists solely of raster glyph images, which is again unfortunate because the original drawings go unused.

VecFusion (Thamizharasan et al. 2023) leverages recent advances in diffusion models to encode raster images and control point fields. However, the absence of vector drawings as input again holds it back from use in the type design industry.
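Diffusion models learn to reverse a gradual noising process. The sketch below shows only the standard DDPM forward process, x_t = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps, applied to a hypothetical array of glyph control points; VecFusion's actual control point field representation and denoising network are not reproduced, and the linear beta schedule values are conventional assumptions.

```python
import numpy as np

def noise_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule; returns the cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Forward diffusion: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(40, 2))   # hypothetical normalised control points
alpha_bar = noise_schedule()
xt, eps = q_sample(x0, 500, alpha_bar, rng)
# A denoising network would be trained to predict eps from (xt, t).
```

At generation time the learned denoiser runs this process in reverse, starting from pure noise and ending at a new set of control points.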

These rare heroes, including the aforementioned parametric approach (Suveeranont and Igarashi 2010), present intriguing possibilities for type design practice. While their capabilities vary, each system’s approach to font completion suggests potential pathways for integrating machine learning into professional workflows.