3.1.3. Cross-modal Generation

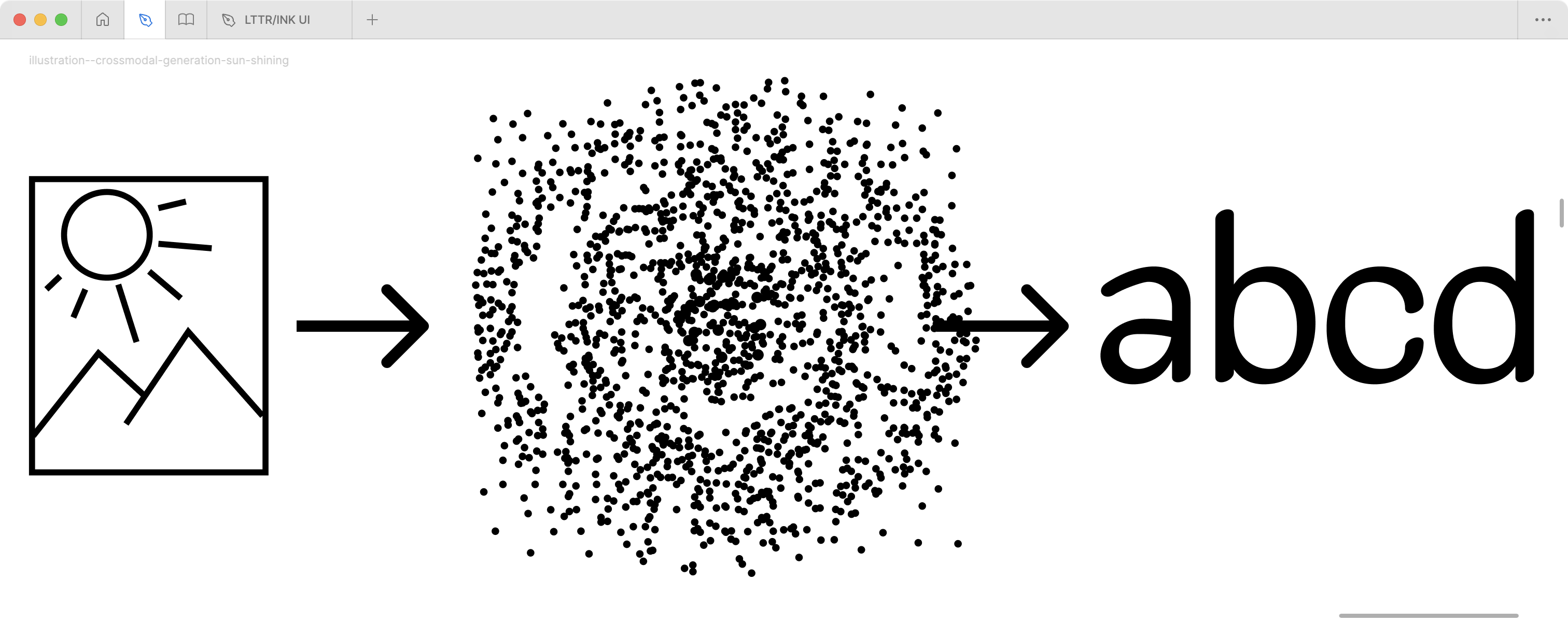

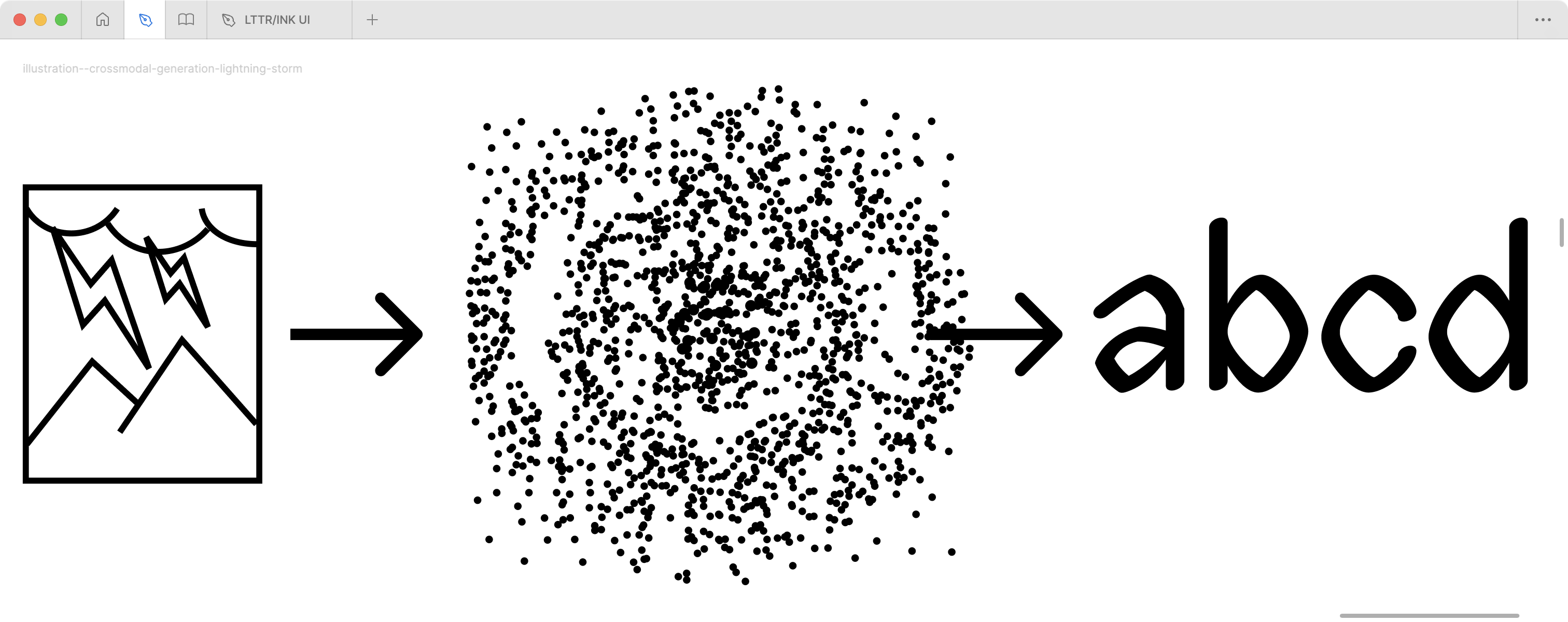

Multimodal or cross-modal generation refers to tasks in which outputs are generated from inputs of a different modality, for instance text-to-image, text-to-speech, and image-to-text. In the font domain, examples include text-to-font and image-to-font generation. The GAN-based framework proposed by (Chen et al. 2020) enables generated fonts to reflect human emotional information derived from scanned face images. This approach can be considered image-to-font, as the input is a scanned image and the output is a generated font. (Kang et al. 2022) aimed to capture correlations between impressions and font styles through a shared latent space in which a font and its impressions are embedded close to each other. This approach can be considered text-to-font, as the input is an impression expressed in text and the output is a generated font. (Matsuda, Kimura, and Uchida 2022) aimed to generate fonts with specific impressions using a font dataset annotated with impression labels. Like the previous work, it can be considered text-to-font, as the input is an impression expressed in text and the output is a generated font. (Ueda, Kimura, and Uchida 2022) trained a Transformer network to analyse the impressions conveyed by fonts; they did not generate fonts. This approach can be considered font-to-text, as the input is a font and the output is text describing its impression.

More details about multimodal representation techniques are provided in the representations chapter.